That is not good: cake-autorate expects that you already have one or two cake instances up and running, but if you specify 0 for upload or download, sqm-scripts will not instantiate a cake instance for the respective direction...

@moeller0 is there a way to ascertain an optimal update interval for updating the CAKE bandwidth?

Let’s take an extreme example. It surely is of no benefit to update the CAKE bandwidth 10,000 times a second (even if we somehow had perfect tracking of the connection bandwidth). So let’s reduce that frequency. But by how much is optimal?

This answer will surely hinge on the implementation specifics of the cake qdisc.

At the moment we simply update every ICMP or LOAD response.

But I am thinking it might be better to update the CAKE bandwidth:

- immediately, if there is bufferbloat detected; or otherwise

- once the update interval has elapsed.

And to be clear, I’m not thinking of skimping on the internal updates of the shaper rates in cake-autorate. Rather I am wondering how often to make the tc qdisc change calls to pass the latest value on to the cake qdiscs.
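The gating idea above could be sketched roughly as follows. This is only an illustration: `should_push_rate`, `tc_update_interval_ms` and `last_push_ms` are hypothetical names that do not exist in cake-autorate, and the 200 ms interval is an assumed value, not a recommendation.

```bash
# Hypothetical sketch: decide whether to pass the current shaper rate on to cake.
# None of these names come from cake-autorate itself.
tc_update_interval_ms=200   # assumed minimum spacing between routine tc calls
last_push_ms=0              # time of the last tc call (ms)

# should_push_rate NOW_MS BUFFERBLOAT_DETECTED
# Succeeds (returns 0) if bufferbloat was detected or the interval has elapsed,
# and records NOW_MS as the time of the latest push.
should_push_rate() {
    if [ "$2" -eq 1 ] || [ $(( $1 - last_push_ms )) -ge "$tc_update_interval_ms" ]; then
        last_push_ms=$1
        return 0
    fi
    return 1
}

# Intended use inside the control loop (not executed here):
#   if should_push_rate "$now_ms" "$bufferbloat"; then
#       tc qdisc change root dev "$ul_if" cake bandwidth "${rate_kbps}Kbit"
#   fi
```

The internal shaper rate would still be recalculated on every response; only the external `tc` call is gated.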

Define your optimality criteria first?

Yes, our rationale is that increasing the shaper rate (above the baseline) is something we only want to do if there is actual demand, and we use the ratio of achieved over shaper rate as proxy for demand (with some fudge factor to increase the shaper before 100% utilisation is reached). Since the load information is updated at a given interval the fastest/most reactive our controller can behave is to act immediately after each load datum is acquired. (There is a limit to useful load sampling rates as the achieved load needs to average over a few packets worth of transmission time, if we sample too fast achieved load will jump between 100 and 0%; I think we implemented some heuristic to make the sample period not too small)
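As a rough illustration of using the achieved-over-shaper-rate ratio as a demand proxy, here is a minimal sketch. The function names are made up for this example, and the 75% threshold is only the figure recalled from memory elsewhere in this thread, not a verified default.

```bash
# Hypothetical sketch: load as the ratio of achieved rate to shaper rate.
high_load_thr_percent=75   # assumed threshold ("IIRC 75% load or so")

# load_percent ACHIEVED_KBPS SHAPER_KBPS -> prints integer load in percent
load_percent() {
    echo $(( 100 * $1 / $2 ))
}

# is_high_load ACHIEVED_KBPS SHAPER_KBPS
# Succeeds if the load is at or above the threshold, i.e. there is demand.
is_high_load() {
    [ "$(load_percent "$1" "$2")" -ge "$high_load_thr_percent" ]
}

load_percent 15200 20000   # prints 76
```

With a 20000 kbit/s shaper and 15200 kbit/s achieved, the load is 76%, which would trigger an increase under a 75% threshold.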

And for shaper rate reductions, we evaluate each ICMP result ASAP, so we do not miss the start of a congestion epoch (but use a refractory period to give the load a bit of time to adjust to the changed shaper rate...)

That is what we do as a response to the RTT/OWD measurements ASAP already...

Not sure what update interval is, but for the baserate we already do that on a clock IIRC, no?

Well, for rate decreases we already have the refractory period that will help, so we are essentially talking about the load dependent rate increases, no?

The thing is, we already increase the shaper rate at IIRC 75% load or so, so that the shaper ramps up pretty quickly so as not to slow down data transfers. Your request would essentially result in a slower ramp-up time... at which point I wonder whether it might not be simpler to just raise the load% at which we increase the shaper rate to 90 or 95%?

High frequency tc qdisc change calls are computationally costly because 'tc' is an external binary. Here is an example showing the effect at 20Hz (circa 15% CPU consumption):

root@OpenWrt-1:~# time for((i=600;i--;)); do read -t 0.05; done

real 0m30.129s

user 0m0.041s

sys 0m0.058s

root@OpenWrt-1:~# time for((i=600;i--;)); do tc qdisc change root dev wan cake bandwidth "10000Kbit"; tc qdisc change root dev "ifb-wan" cake bandwidth "10000Kbit"; read -t 0.05; done

real 0m34.617s

user 0m3.027s

sys 0m1.746s

How about implementing a rate increase refractory period and simultaneously increase the shaper rate increase factor to give the same net increase rate of change per unit time as we have now. Perhaps this would be best?

What might be an optimal increase_refractory_period_ms (assuming that the increase factor is updated to give the same increase rate per unit time as we have now)?

Get a faster CPU then

How about just driving rate increases from the LOAD reports, or are we doing that right now? After all, if the load is at 76% and our threshold is at 75% we will increase the rate anytime we get triggered... with e.g. 50ms average inter-ICMP delay and 200ms load sampling period we get 4 increase steps instead of 1, all based on the same information about the load...

Nah, we need to be cautious here, our goal is to keep the rate increase dynamic 'slower' than the rate reduction dynamics to avoid oscillations and bad resonance effects...

Not a fan of that, really, as I see no clear way to size that parameter. But see above: I think using the load sampling interval has some merit, as we are prone to double dip if we base 4 rate increases on the same load data...

That way the load sampling period acts as natural increase refractory period...

I like this idea.

So increase and decay changes would be driven by LOAD reports, and bufferbloat changes and starlink compensation changes would be driven by ICMP responses?

What’s the difference between that and setting refractory interval of 200ms?

Here depending on how you select the refractory period length in relation to the load sampling period you still can have double dipping...

And you have one more parameter to tune compared to using the load sampling.

The only question I have is, at low rates we extend the load sampling period already so that might possibly end up with large temporal steps between rate increases. But then at really low rates all we can do is manage the pain anyway...

How about we try driving this by LOAD samples first* and if that does not help, consider the additional refractory period?

Maybe with a simple counter of rate increase attempts, that is reset on every new LOAD datum to zero and we only change the rate(s) if the counter (for the relevant direction) is equal to zero? (And also increase the counter after having increased the rate first inside the current LOAD epoch...)
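The counter idea could look something like the sketch below, for a single direction. All names are invented for the example, and the 1.01 factor is the current per-response increase factor mentioned elsewhere in this thread.

```bash
# Hypothetical sketch: at most one rate increase per LOAD sampling epoch.
rate_kbps=20000
increase_attempts=0   # reset to 0 on every new LOAD datum

# Called whenever a new LOAD datum arrives.
on_load_sample() {
    increase_attempts=0
}

# Called on each ICMP response while the load is above the increase threshold.
on_icmp_high_load() {
    if [ "$increase_attempts" -eq 0 ]; then
        rate_kbps=$(( rate_kbps * 101 / 100 ))   # factor 1.01, integer arithmetic
    fi
    increase_attempts=$(( increase_attempts + 1 ))
}

# One LOAD sample followed by four ICMP responses (200 ms / 50 ms):
on_load_sample
for i in 1 2 3 4; do on_icmp_high_load; done
echo "$rate_kbps"   # prints 20200: one increase, not four
```

Without the counter, the same four responses would each apply the factor, all based on the same load datum.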

Hmm yes, cake with cake-autorate for sure helps Starlink connections, albeit the satellite switching compensation code hasn’t been tested enough to be sure we are doing the right thing.

Thank you, lynx. Yes, I think I will simply set SQM a little below my average Starlink throughput and not take the risk, even though this idea for Starlink is great.

Won’t work. Starlink capacity varies way too much, as your own tests there show.

OK, I see; I will have to go by that. So in this Starlink config file, those are the only values chosen to be modified.

Hmm but isn’t it a bit mixed up to use refractory period for decay in load idle/low but then use this separate mechanism for increases?

Wouldn’t it be more consistent to have a simple increase refractory period?

Or have both increase and decay subject to this new mechanism?

What’s the double dipping phenomenon?

So shaper rate increase and (congestion driven) decrease operate on different principles hence no real reason for them using a symmetric configuration.

The move to baseline approach really just needs a 'constant enough tick'# (as it only should trigger if load is below the rate increase threshold and there is no increased delay*) whether that is 1000ms or 200ms does not really matter all that much as we can adjust the rate adjustment factor to get similar behaviour for different length tick periods.

#) Having some tick slack is fine, as we only want to reach baseline rates eventually, with loose bounds on the precise time...

*) Not sure whether we currently require one decay_refractory_period_ms after the last shaper change before we start moving the shaper rate towards the baseline rates... that would make some sense...

Only after the last decay at present. Should it be after any other change too (so after increase, decay, bufferbloat or starlink compensation)?

For the increase modification I’m thinking of retaining a simple updated_achieved_rates[${direction}] array that is set to 1 after a LOAD report and then shaper rate increases for each direction are made subject to the value of 1 and then the value is set to 0 after any increase.

And I’ll update the default shaper rate increase factor to keep the same effective increase per unit time.

Right now we have the increase factor set to 1.01 and it is applied every 50ms. But now it will be applied every 200ms. So the increase factor should now be 1.04 (1.01^4), right?
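The compounding arithmetic can be checked quickly. The helper below is just an illustrative name; it raises the per-response factor to the number of responses per LOAD period.

```bash
# Hypothetical helper: factor equivalent to N applications of FACTOR.
# equivalent_factor FACTOR N
equivalent_factor() {
    awk -v f="$1" -v n="$2" 'BEGIN { printf "%.4f\n", f ^ n }'
}

equivalent_factor 1.01 4   # prints 1.0406
```

So 1.04 is a slight round-down of 1.01^4 = 1.04060401, close enough for a default that users may tune anyway.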

Does this seem OK?

By the way, at present shaper rate updates are instigated with each ICMP response. I could try to shake things up so that increases or decays are applied with LOAD reports and then bufferbloat or starlink compensation is handled with ICMP responses. But that’d be a bigger change. Any thoughts on that?

One factor that seems significant is that LOAD reports are much more regular than ICMP responses.

I guess rate increases or decreases driven by load and congestion certainly should reset the timer (we just changed the shaper for an immediate reason, so we probably do NOT want to regress to the base rates right now). Practically, that is unlikely to have that big an effect, as typically decay_refractory_period_ms is relatively long and shaper_rate_adjust_up_load_low (and its down counterpart?) are rather small, but conceptually it just does not feel right.

Starlink compensation probably falls into the same bucket: we just fiddled with the rate, so let's not fudge the rate for a longer-term goal.
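A minimal sketch of "any shaper change resets the decay timer" might look like this. The function names and the 1000 ms value are assumptions for illustration; only decay_refractory_period_ms is a name taken from the discussion.

```bash
# Hypothetical sketch: decay towards the base rate only once
# decay_refractory_period_ms has passed since the last shaper change of any kind.
decay_refractory_period_ms=1000   # assumed value for illustration
last_shaper_change_ms=0

# Call after every shaper change: increase, bufferbloat decrease,
# Starlink compensation or decay.
note_shaper_change() {
    last_shaper_change_ms=$1
}

# may_decay_towards_base NOW_MS
# Succeeds if the refractory period has elapsed since the last change.
may_decay_towards_base() {
    [ $(( $1 - last_shaper_change_ms )) -ge "$decay_refractory_period_ms" ]
}
```

For example, after `note_shaper_change 5000`, a check at 5500 ms fails and a check at 6000 ms succeeds.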

That is fine as well, a boolean or a counter will work just fine...

Sure, why not, but let's not do that automatically, just change the default value as that is something users might want/need to play with, no?

If the current rate of re-approaching the base rate feels about right then this looks like a decent small change, but I would guess the exact number does not really matter all that much here... as long as we reach the base rates eventually? (That said, I would do exactly what you propose).

But wasn't that your idea, to change how we address rate increase steps? I would guess that the updated_achieved_rates[${direction}] trick would work immediately, just make shaper rate increases dependent on the respective updated_achieved_rates[${direction}] being 0?

And 'I could try to shake things up so that increases or decays are applied with LOAD reports' sounds wrong: rate decreases should still be driven by delay data, preferably ASAP. It is the rate increases that should essentially only happen once per LOAD sampling interval, but then it is not that critical whether we drive this from the LOAD report directly or whether it gets triggered by the first delay sample after the LOAD update, no?

it is the rate increases that should essentially only happen once per LOAD sampling interval, but then it is not that critical whether we drive this from the LOAD report directly or whether it gets triggered by the first delay sample after the LOAD update, no?

Yes, but is that really all that important? If we restrict rate increases to once per LOAD period, on average the rate will be 1/LOAD_period even if we do not actually drive it from the LOAD report reception?

I've been using cake-autorate with Starlink for quite a while now. Here's my current config:

#!/bin/bash

# *** STANDARD CONFIGURATION OPTIONS ***

### For multihomed setups, it is the responsibility of the user to ensure that the probes

### sent by this instance of cake-autorate actually travel through these interfaces.

### See ping_extra_args and ping_prefix_string

dl_if=ifb4eth0 # download interface

ul_if=eth0 # upload interface

# Set either of the below to 0 to adjust one direction only

# or alternatively set both to 0 to simply use cake-autorate to monitor a connection

adjust_dl_shaper_rate=1 # enable (1) or disable (0) actually changing the dl shaper rate

adjust_ul_shaper_rate=1 # enable (1) or disable (0) actually changing the ul shaper rate

min_dl_shaper_rate_kbps=10000 # minimum bandwidth for download (Kbit/s)

base_dl_shaper_rate_kbps=120000 # steady state bandwidth for download (Kbit/s)

max_dl_shaper_rate_kbps=250000 # maximum bandwidth for download (Kbit/s)

min_ul_shaper_rate_kbps=2000 # minimum bandwidth for upload (Kbit/s)

base_ul_shaper_rate_kbps=20000 # steady state bandwidth for upload (KBit/s)

max_ul_shaper_rate_kbps=40000 # maximum bandwidth for upload (Kbit/s)

dl_delay_thr_ms=40.0 # (milliseconds)

ul_delay_thr_ms=40.0 # (milliseconds)

#sss_compensation=1

# *** OVERRIDES ***

### See cake-autorate_defaults.sh for additional configuration options

### that can be set in this configuration file to override the defaults.

### Place any such overrides below this line.

# *** DO NOT EDIT BELOW THIS LINE ***

config_file_check="cake-autorate"

I actually no longer use the Starlink compensation code and am not sure it is necessary. When that code was added (a year ago?) Starlink showed excessive buffering around the time of the satellite switches, which occur on a predictable schedule as often as every 15 seconds. SpaceX has recently made changes which seem to have improved their latency significantly by reducing some of this bufferbloat. I haven't tried detailed latency tests myself since those changes, but they have a whitepaper here about the changes.

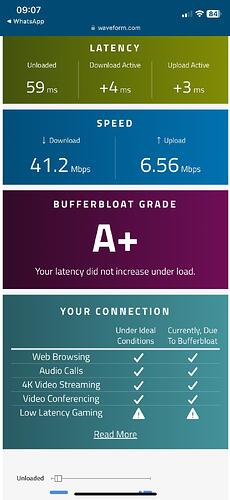

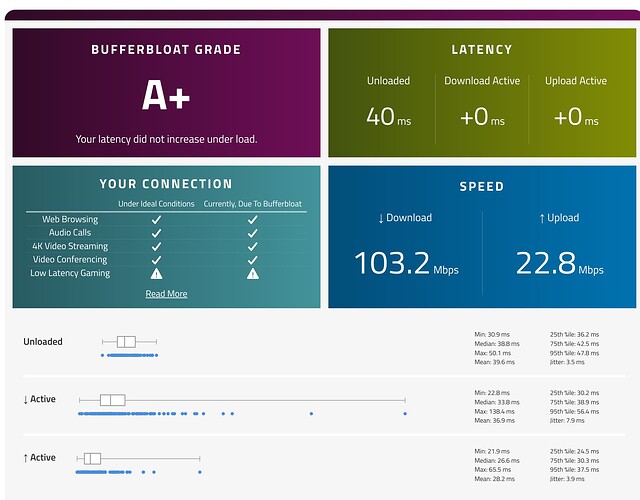

Here's a waveform test with my config. This was during the daytime, when Starlink probably has excess capacity:

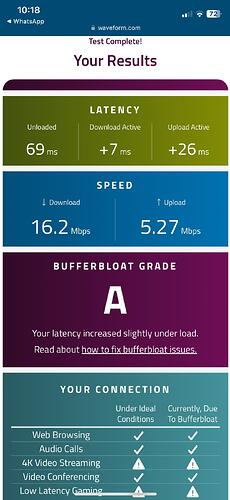

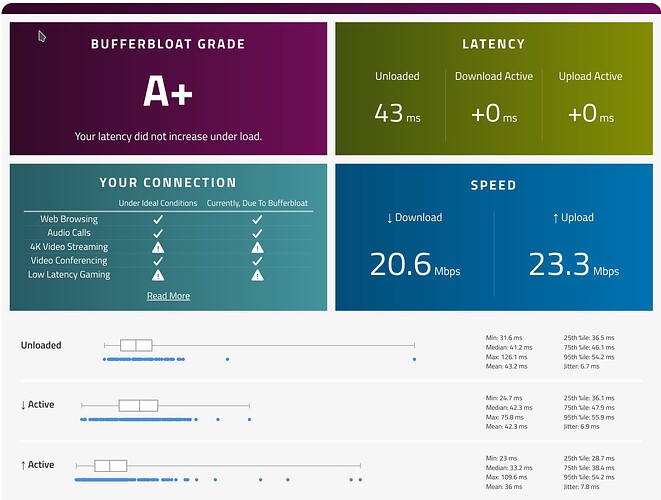

Here's a waveform test last evening, during a time when Starlink's capacity is likely constrained because of so many people streaming over the satellites:

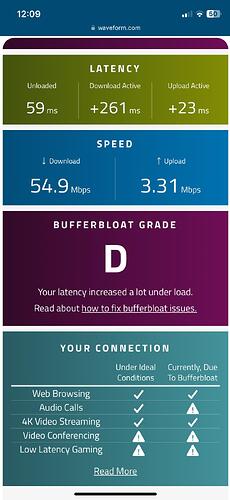

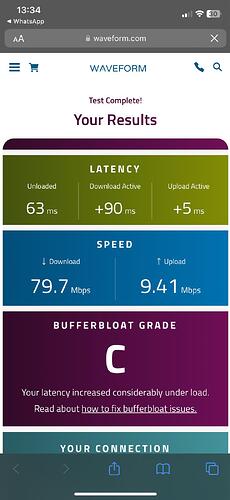

I just tried turning cake-autorate off to see what would happen, as I haven't tried that in a while. It is daytime here now so Starlink has excess capacity. It still performed really well with an A+ for bufferbloat and it showed 0 ms excess latency under load for both download and upload. I suspect, though, that without cake-autorate the bufferbloat would get bad in the evening when the bandwidth is constrained on Starlink, as cake-autorate helps out in that time by turning down the bandwidth to preserve low latency. I guess I should try that again sometime to see what would happen.

+1; that would be quite interesting!

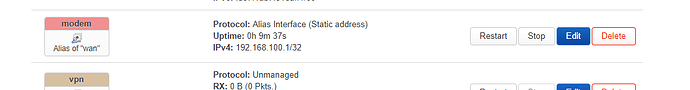

Thank you very much for your config. So did you modify just this part? Is your Starlink in bypass mode? I ask because I have the Starlink switch router, and I used the following to get internet access; I will share it with you:

###STARLINK CONFIG INTERNET

uci add_list firewall.@zone[1].network="modem"

uci commit firewall

/etc/init.d/firewall restart

# Configure network

uci -q delete network.modem

uci set network.modem="interface"

uci set network.modem.device="@wan"

uci set network.modem.proto="static"

uci set network.modem.ipaddr="192.168.100.1"

uci set network.modem.netmask="255.255.255.255"

uci commit network

/etc/init.d/network restart

# Saving all modified values

uci commit

reload_config

My config, actually.

@gba can you share your /etc/config/sqm

Yes, I'll try to remember to try that tonight.